Metis AIPU: AI Acceleration at the Edge

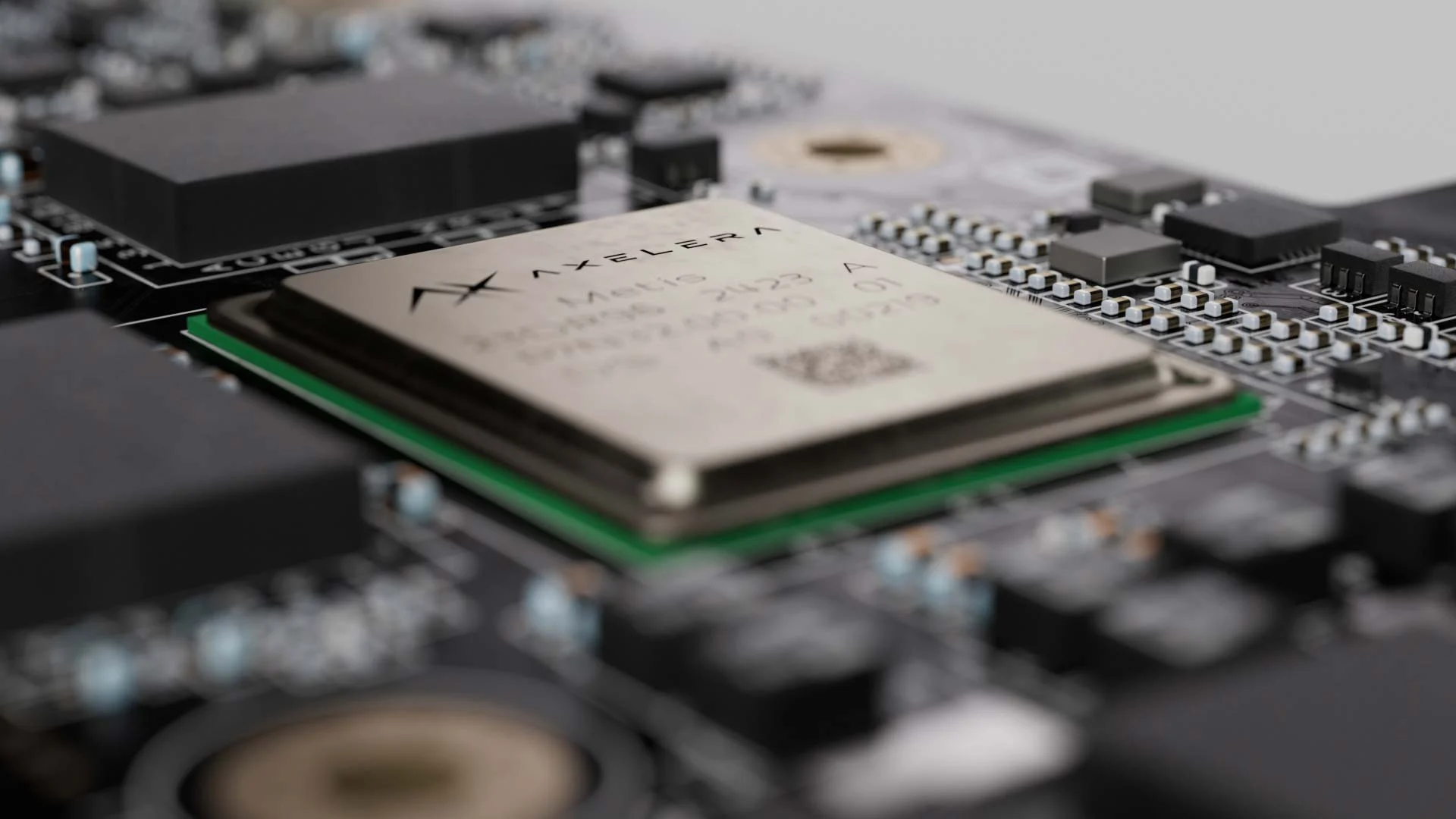

What it is. The Metis AI Processing Unit (AIPU) from Axelera AI is designed specifically for edge computing, combining high computational efficiency, scalability, and straightforward deployment. Its in-memory compute architecture enables real-time inference on the edge device itself, reducing reliance on the cloud.

Key capabilities.

- Performance efficiency: 214 TOPS on a single chip, industry-leading TOPS/Watt, and scalability across devices.
- In-memory computing: minimizes data movement, latency, and energy usage, making it well suited to real-time vision tasks.
- Edge-ready design: compact, low-power footprint for embedded systems such as smart cameras, industrial automation, retail analytics, robotics, and more.

Use cases. Smart Surveillance (real-time event analysis), Industrial Automation (on-device model deployment), Retail Intelligence (in-store analytics and shopper behavior), and Smart Cities & Mobility (ADAS, traffic monitoring, and more).

Metis Product Line

Metis M.2 Card — Compact Power for Embedded AI

Delivers full AI inference acceleration in an ultra-compact format for space-constrained and fanless edge devices.

Key features

- Form factor: M.2 2280 B+M key
- Performance: up to 214 TOPS @ ~8 W
- Interface & fit: direct integration into embedded designs; suitable for Linux-based systems, smart cameras, drones, and robotics
- Highlights: small thermal footprint and easy drop-in integration for AI on the move or in tight enclosures

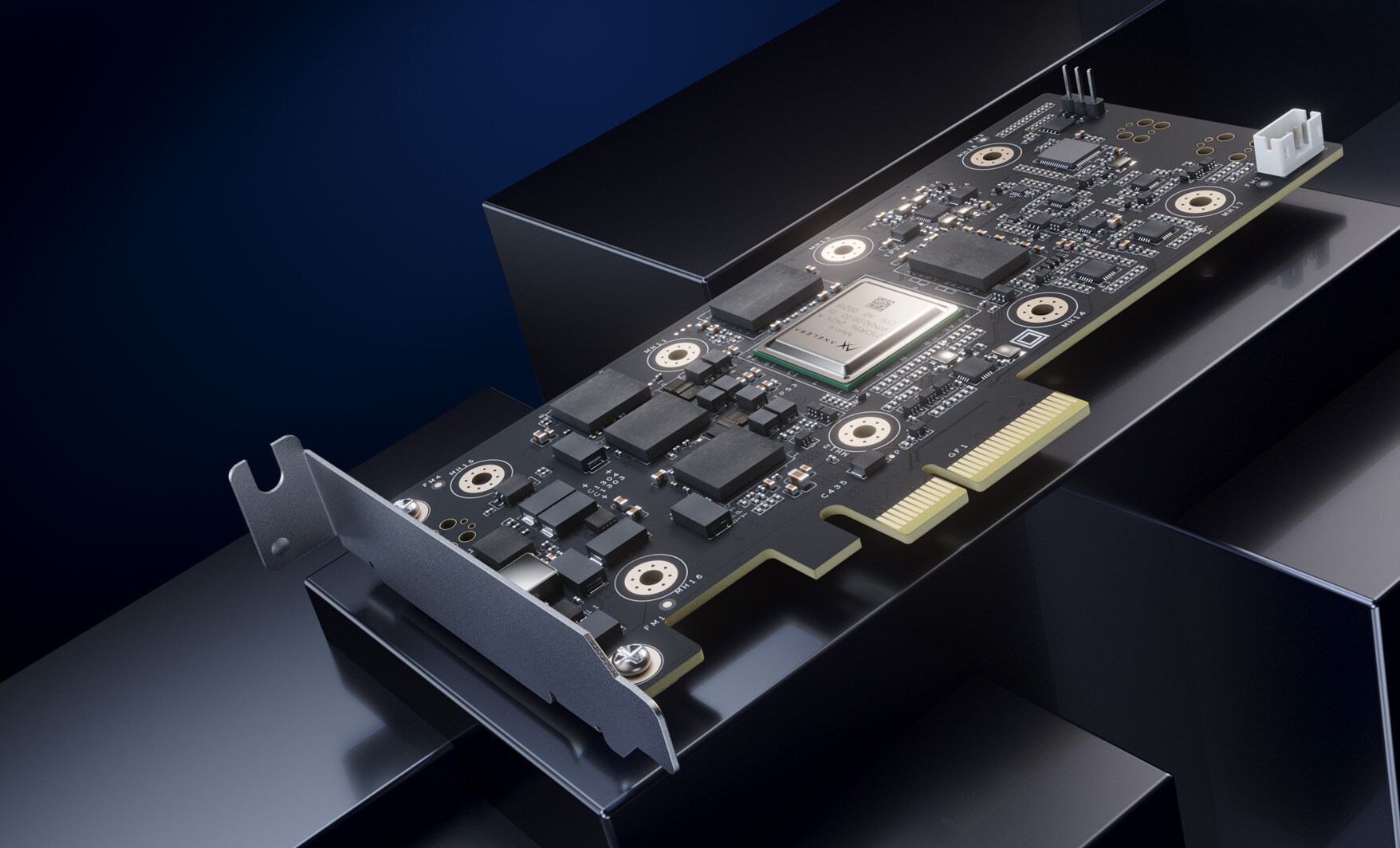

Metis PCIe Card — High Throughput for Edge Servers

A high-performance, plug-and-play accelerator for desktop, server, or industrial edge environments.

Key features

- Form factor: HHHL (Half-Height, Half-Length)
- Interface: PCIe Gen4 x8
- Performance: up to 214 TOPS @ ~15 W
- Scalability & fit: multi-card support for higher throughput on x86 edge servers, workstations, and industrial PCs
- Highlights: exceptional TOPS/Watt for smart cities, industrial vision, and real-time analytics
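
To put the two headline figures side by side, the efficiency implied by the quoted numbers can be worked out directly. The sketch below is only arithmetic on the approximate figures in the feature lists (214 TOPS at roughly 8 W for the M.2 card and roughly 15 W for the PCIe card). The function name `tops_per_watt` is an illustrative assumption, and real-world efficiency will depend on the workload and on how power is measured.

```python
def tops_per_watt(peak_tops: float, power_watts: float) -> float:
    """Return peak TOPS divided by power draw in watts."""
    if power_watts <= 0:
        raise ValueError("power_watts must be positive")
    return peak_tops / power_watts


# Approximate figures quoted in the feature lists (peak TOPS, typical watts).
cards = {
    "Metis M.2": (214.0, 8.0),
    "Metis PCIe": (214.0, 15.0),
}

for name, (tops, watts) in cards.items():
    print(f"{name}: ~{tops_per_watt(tops, watts):.1f} TOPS/W")
```

These are peak-rate ratios, so they are best read as upper bounds for comparison rather than as measured application efficiency.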

Metis Compute Board — Integrated Compute for Custom Systems

A compact compute module integrating the Metis AIPU with required I/O, optimized for deep integration into custom edge devices. Suited to OEMs and embedded system designers building for machine vision, industrial robotics, and smart infrastructure.

Metis Evaluation Systems – Accelerate AI Prototyping

Axelera’s Metis Evaluation Systems provide a ready-to-use platform for developers to explore and benchmark the power of the Metis AIPU in real-world scenarios.

Includes:

- Metis PCIe Card or Metis M.2 Card (depending on configuration)
- Reference host system or integration board
- Pre-installed Voyager SDK
- Tools for model import, optimization, and deployment

Use Cases:

- Proof of concept (PoC) development
- Model benchmarking and testing
- AI workload evaluation across edge use cases

Voyager SDK by Axelera AI

As AI applications move increasingly to the edge, developers face the challenge of deploying powerful models on resource-constrained devices without compromising accuracy or speed. The Voyager SDK from Axelera AI addresses this challenge with a fully integrated software stack that accelerates and simplifies the path from AI model development to deployment on Metis AIPU-powered platforms.

Key Features of Voyager SDK

End-to-End Toolchain

Voyager SDK supports every stage of the AI lifecycle, including:

- Model conversion from frameworks and formats such as TensorFlow, PyTorch, and ONNX
- Model quantization and optimization for edge inference
- Automated compilation for Metis AIPU hardware
- Edge runtime environment for low-latency inference
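
Of the stages listed, quantization is the one that most benefits from a concrete picture. The following is a minimal, generic sketch of symmetric per-tensor int8 quantization in plain Python. It is not Voyager SDK code and makes no claim about the scheme the SDK actually uses; the names `quantize_int8` and `dequantize` are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization of floats to the range [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Fall back to a scale of 1.0 for an empty or all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes: List[int], scale: float) -> List[float]:
    """Map integer codes back to approximate float values."""
    return [c * scale for c in codes]


weights = [0.50, -1.27, 0.03, 1.00]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)

# The rounding error per value is at most half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(codes, scale, max_error)
```

The trade-off this illustrates is general: 8-bit codes take a quarter of the storage of 32-bit floats, at the cost of a bounded rounding error per value.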

Optimized for In-Memory Compute

Voyager SDK is purpose-built to extract maximum performance from Axelera’s Metis AIPU, leveraging its in-memory compute architecture to:

- Minimize data movement
- Reduce energy consumption
- Deliver ultra-low-latency inference
- Achieve high throughput for vision workloads

Developer-Friendly Experience

Voyager SDK simplifies edge AI development through:

- Command-line and API access for automation and scripting
- Integrated profiling and debugging tools
- Clear performance metrics and optimization insights
- Support for containerized workflows (e.g., Docker)
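
As an illustration of the kind of performance metrics a profiling step typically reports, the sketch below times repeated calls to a stand-in inference function and returns mean and tail latency. It uses only the Python standard library; `fake_infer` and `measure_latency_ms` are hypothetical placeholders and do not correspond to any Voyager SDK API.

```python
import math
import statistics
import time
from typing import Callable, Dict


def measure_latency_ms(
    fn: Callable[[], object], runs: int = 50, warmup: int = 5
) -> Dict[str, float]:
    """Time `fn` over `runs` calls after `warmup` untimed calls; stats in ms."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    for _ in range(warmup):
        fn()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    # Nearest-rank 95th percentile of the sorted samples.
    p95_index = max(0, math.ceil(0.95 * len(samples)) - 1)
    return {
        "mean_ms": statistics.fmean(samples),
        "p95_ms": samples[p95_index],
        "max_ms": samples[-1],
    }


def fake_infer() -> int:
    """Hypothetical stand-in for one inference call."""
    return sum(i * i for i in range(10_000))


stats = measure_latency_ms(fake_infer, runs=20, warmup=2)
print({key: round(value, 3) for key, value in stats.items()})
```

Reporting a tail percentile alongside the mean matters for real-time workloads, where occasional slow frames are often more damaging than a slightly higher average.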

Flexible Model Input & Compatibility

Voyager is compatible with common frameworks, model sources, and deployment workflows:

- ONNX, TensorFlow, PyTorch
- Pre-trained and custom models
- Batch processing and streaming inference
- Easy integration into CI/CD pipelines
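
The difference between batch processing and streaming inference can be shown with a small, framework-agnostic sketch: the same sequence of frames is either handled one item at a time or grouped into fixed-size batches. Here `run_model` is a hypothetical stand-in for an inference call and `make_batches` is an illustrative helper; neither is a Voyager SDK function.

```python
from itertools import islice
from typing import Iterable, Iterator, List


def make_batches(items: Iterable[int], batch_size: int) -> Iterator[List[int]]:
    """Yield consecutive lists of up to `batch_size` items from `items`."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def run_model(frames: List[int]) -> List[int]:
    """Hypothetical stand-in for inference: doubles each input value."""
    return [2 * frame for frame in frames]


frames = range(7)  # stand-in for a sequence of frame IDs

# Streaming: one frame per call, so each result is ready as soon as its frame is.
streaming_results = [run_model([frame])[0] for frame in frames]

# Batch: frames are grouped to amortize per-call overhead; the last batch may be short.
batch_results = [out for batch in make_batches(frames, 3) for out in run_model(batch)]

print(streaming_results == batch_results)
```

Both paths produce the same outputs; the choice between them is a latency versus throughput trade-off that depends on the application.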